Verify and Monitor Quality

This documentation describes Heartex platform version 1.0.0, which is no longer supported. For information about reviewing annotations and monitoring annotation quality in Label Studio Enterprise Edition, the equivalent of Heartex platform version 2.0.x, see Review annotations in Label Studio.

Reviewing completions and labels is an essential step in training data management: it ensures that only the highest-quality data is used to train your machine learning model. Without reviewing the quality of your labeled data, you risk letting weak annotations slip through the cracks, which can degrade the performance of your model.

Review Completions

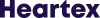

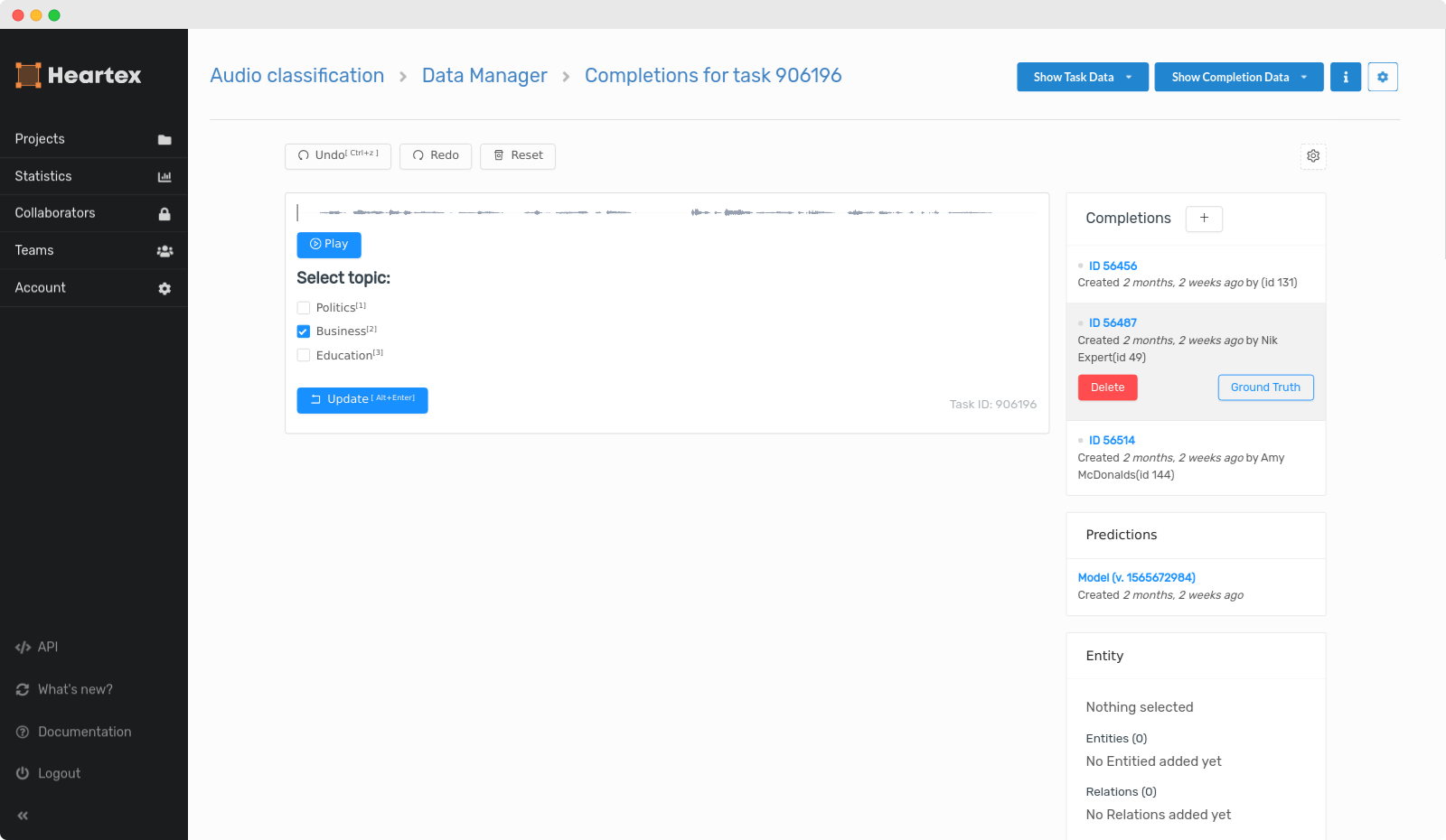

Reviewing completions and labels starts in the data manager, where you can view all items in your dataset and explore individual completions.

Modifying a completion

You modify an existing label in the same environment you use for labeling. Click the completion you want to review, and the labeling interface opens so you can make any necessary improvements to the annotations.

Choosing what to review

There are several ways to prioritize which tasks to review. In the data manager, you can order tasks by annotator, by consensus score, or by model confidence score.
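The ordering described above can be sketched in Python as a sort over task rows. This is a minimal sketch, not Heartex code: the `Task` record and its fields (`annotators`, `consensus`, `model_confidence`) are hypothetical stand-ins for the columns shown in the data manager, and it assumes that lower scores mean a task deserves review sooner.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    """Hypothetical, simplified view of a row in the data manager."""
    task_id: int
    annotators: tuple[str, ...]
    consensus: Optional[float]         # None if the task has only one completion
    model_confidence: Optional[float]  # None if no model prediction exists


def review_order(tasks: list[Task]) -> list[Task]:
    """Put the least trustworthy tasks first.

    Low consensus sorts first; ties are broken by low model confidence.
    Missing scores are treated as infinity, so they sort last.
    """
    def key(t: Task) -> tuple[float, float]:
        consensus = t.consensus if t.consensus is not None else float("inf")
        confidence = t.model_confidence if t.model_confidence is not None else float("inf")
        return (consensus, confidence)

    return sorted(tasks, key=key)


def by_annotator(tasks: list[Task], annotator: str) -> list[Task]:
    """Keep only the tasks that a given annotator worked on."""
    return [t for t in tasks if annotator in t.annotators]


tasks = [
    Task(1, ("alice", "bob"), 0.9, 0.8),
    Task(2, ("bob", "carol"), 0.4, 0.95),
    Task(3, ("alice", "carol"), 0.4, 0.5),
    Task(4, ("bob",), None, 0.3),
]
print([t.task_id for t in review_order(tasks)])             # [3, 2, 1, 4]
print([t.task_id for t in by_annotator(tasks, "alice")])    # [1, 3]
```

Sorting on a tuple keeps the primary criterion (consensus) and the tie-breaker (model confidence) in one key function.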

Delete & Requeue

You can delete a completion and return its task to the labeling queue. Remove one or more completions from a task to mark it as not finished and put it back into the labeling queue.
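A minimal sketch of the delete-and-requeue behavior, assuming that a task counts as finished once it has the required number of completions from unique annotators (the setting described under Configuring Agreement). The `QueuedTask` class, the `delete_and_requeue` function, and the plain-list queue are hypothetical illustrations, not the Heartex data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class QueuedTask:
    """Hypothetical task record; not the Heartex data model."""
    task_id: int
    required_completions: int  # assumed: the agreement overlap setting
    completions: dict[str, dict] = field(default_factory=dict)  # annotator -> result

    @property
    def is_finished(self) -> bool:
        # Assumption: finished means enough completions from unique annotators.
        # The dict holds at most one completion per annotator.
        return len(self.completions) >= self.required_completions


def delete_and_requeue(task: QueuedTask, annotators: list[str], queue: list[int]) -> None:
    """Delete the given annotators' completions; requeue the task if it is no longer finished."""
    for name in annotators:
        task.completions.pop(name, None)
    if not task.is_finished and task.task_id not in queue:
        queue.append(task.task_id)


task = QueuedTask(7, required_completions=2,
                  completions={"alice": {"label": "cat"}, "bob": {"label": "dog"}})
queue: list[int] = []
print(task.is_finished)         # True
delete_and_requeue(task, ["bob"], queue)
print(task.is_finished, queue)  # False [7]
```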

Adding and Removing Completions

Inside the editor, you can create a new completion by clicking the Plus icon, and you can also remove any existing completion.

Configuring Agreement

Agreement lets you measure the quality of your training data quantitatively. This matters because high-quality training data leads to better-performing models. Agreement works by having more than one annotator (human or machine) label the same asset (image, text, audio, video, and so on). Once an asset has been labeled more than once, the results are compared quantitatively using a scoring equation, and a consensus score is calculated automatically. Consensus is computed in real time, so you can take immediate corrective action to improve your training data and model performance.

The primary agreement setting is the number of completions you need from unique annotators. For example, if you set it to 5, Heartex expects each task to be labeled by five different team members.
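Heartex does not document its scoring equation here, so the following Python sketch swaps in one simple, common agreement metric for single-label classification: the fraction of annotator pairs that chose the same label. The function names and the label dictionary format are hypothetical; the second function mirrors the "completions from unique labelers" setting by counting one entry per annotator.

```python
from itertools import combinations


def pairwise_agreement(labels: dict[str, str]) -> float:
    """Fraction of annotator pairs that gave a task the same label.

    `labels` maps each unique annotator (human or model) to the single
    class label they chose. The result lies between 0 and 1.
    """
    if len(labels) < 2:
        raise ValueError("agreement needs at least two completions")
    pairs = list(combinations(labels.values(), 2))
    matches = sum(1 for a, b in pairs if a == b)
    return matches / len(pairs)


def has_enough_completions(labels: dict[str, str], required: int) -> bool:
    """Check the required number of completions from unique annotators."""
    return len(labels) >= required


labels = {"alice": "cat", "bob": "cat", "carol": "dog", "model": "cat"}
print(pairwise_agreement(labels))         # 0.5
print(has_enough_completions(labels, 5))  # False
```

With three of four annotators choosing "cat", 3 of the 6 pairs agree, giving 0.5. Pairwise agreement is easy to read but does not correct for chance agreement; statistics such as Cohen's or Fleiss' kappa do.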

Individual Item Consensus

You can view the consensus score of each item in the dataset in the data manager.

Ground Truth

Ground Truth items are a quality assurance tool for training data, where training data quality means the accuracy and consistency of the labels. Completions from each annotator and from the model are compared against the ground truth completion for the same task, and an accuracy score between 0 and 1 is calculated. Use Ground Truth to make sure the labeling team labels data accurately from the start and throughout the lifecycle of the training data.
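To make the 0 to 1 accuracy score concrete, here is a minimal Python sketch for single-label tasks. It assumes accuracy is simply the share of an annotator's ground truth tasks where their label matches the ground truth label; the actual Heartex comparison may differ, especially for regions such as bounding boxes or text spans. `ground_truth_accuracy` and its input formats are hypothetical.

```python
from collections import defaultdict


def ground_truth_accuracy(
    ground_truth: dict[int, str],
    completions: list[tuple[str, int, str]],
) -> dict[str, float]:
    """Score each annotator (or model) against ground truth tasks.

    ground_truth: task_id -> ground truth label
    completions: (annotator, task_id, label) triples; tasks without
    a ground truth completion are skipped.
    Returns annotator -> accuracy between 0 and 1.
    """
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for annotator, task_id, label in completions:
        if task_id not in ground_truth:
            continue
        total[annotator] += 1
        if label == ground_truth[task_id]:
            correct[annotator] += 1
    return {name: correct[name] / total[name] for name in total}


gt = {1: "cat", 2: "dog"}
comps = [
    ("alice", 1, "cat"), ("alice", 2, "cat"), ("alice", 3, "cat"),  # task 3 has no ground truth
    ("bob", 1, "cat"), ("bob", 2, "dog"),
    ("model", 1, "dog"),
]
scores = ground_truth_accuracy(gt, comps)
print(scores)  # {'alice': 0.5, 'bob': 1.0, 'model': 0.0}
```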

To create new Ground Truth items, go to the data manager and open an individual task. Create a new completion or select an existing one, then click the Ground Truth button. You can also click the Star icon next to a task directly in the data manager. If a task contains multiple completions, only the first one is set as ground truth.