[v1.2.1] 15 July 2020

Introducing Organizations

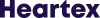

We reworked the team logic and introduced organizations, which combine members and teams. You can invite members to your organization and build teams from organization members. Each member has one role in the organization, and that role applies across all of its teams. You can change roles on the organization page.

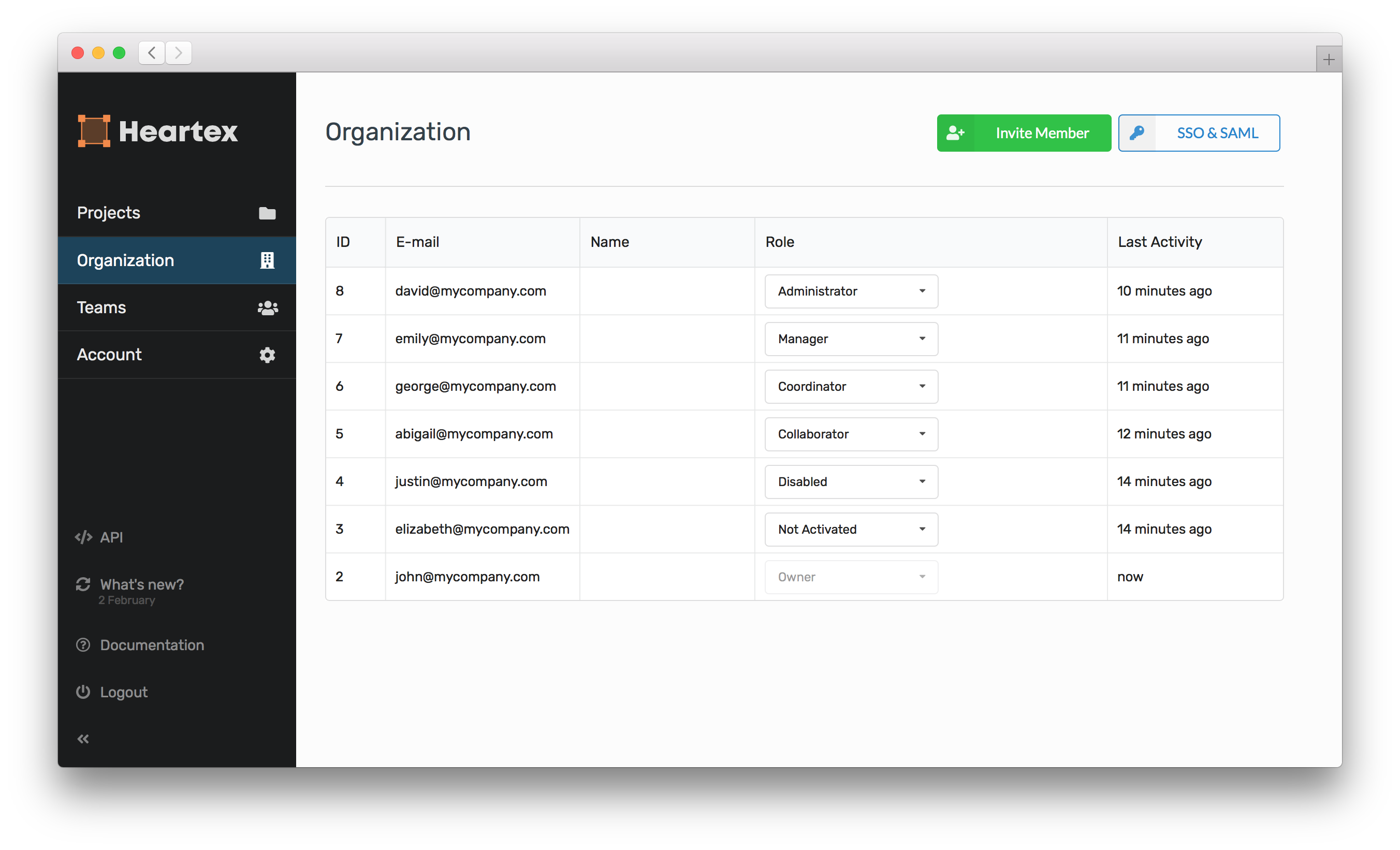

Single Sign-On via SAML

Organizations can now use Single Sign-On (SSO) via the SAML protocol, so you can sync your users from Microsoft Active Directory, OneLogin, and other SAML-supported services.

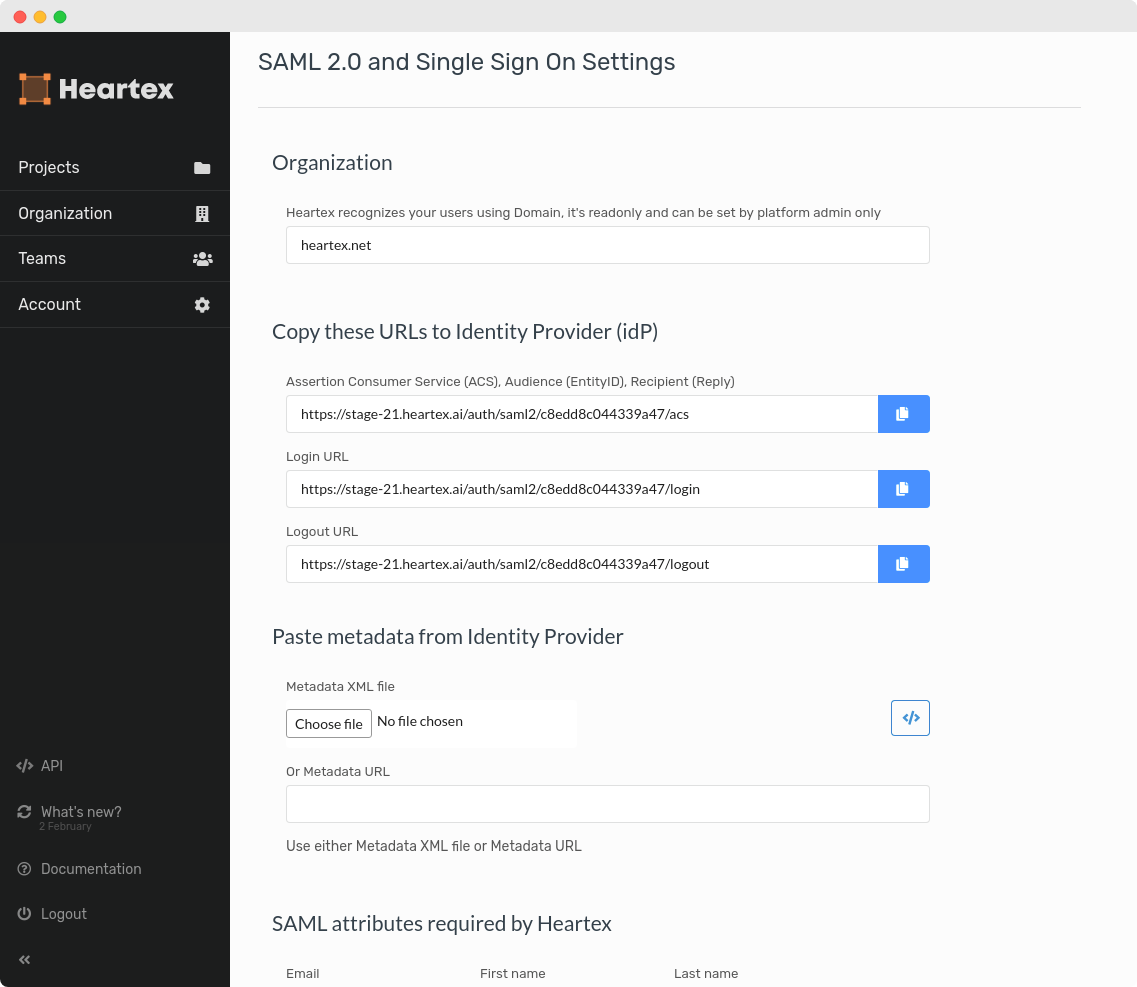

S3 Cloud Storage

The platform now supports S3 cloud storage: set up S3 in the project settings, and tasks are imported automatically.

Nested Labeling

Nested labeling lets you specify multiple classification options that appear after you select a connected parent class.

It can match on the selected Choice or Label value, and it also works with the required attribute, automatically selecting a region you haven't labeled yet. To try it out, check the Choices documentation and look for the following attributes: visibleWhen, whenTagName, whenLabelValue, whenChoiceValue.

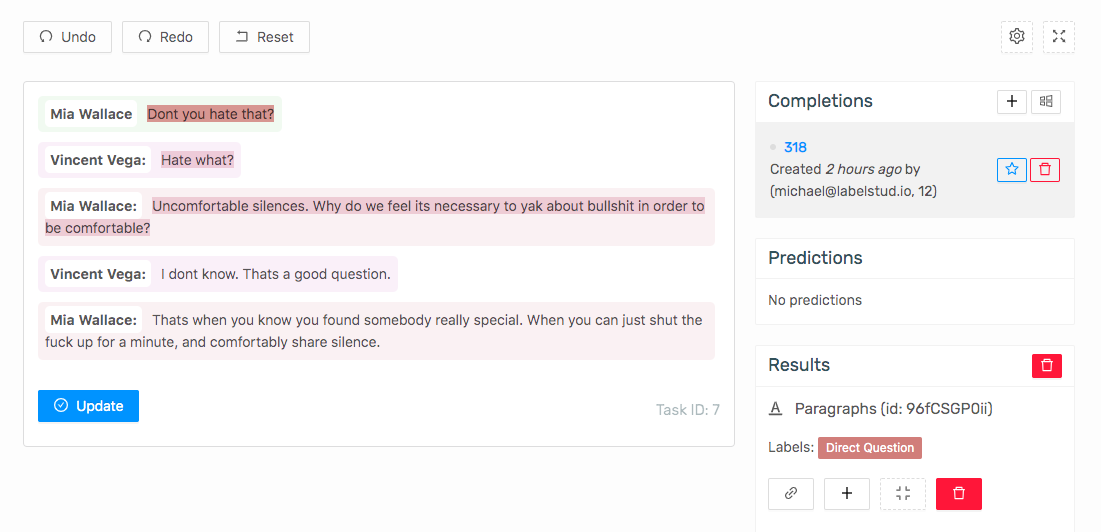

Labeling Paragraphs and Dialogues

Introducing a new object tag called "Paragraphs". A paragraph is a piece of text with optional metadata, such as the author and the timestamp. With this tag we're also experimenting with predefined layouts. For example, to label a dialogue you can use the following config: <Paragraphs name="conversation" value="$conv" layout="dialogue" />

Verify stream

Use Verify Stream to check that your completions are correct. Go to the data page and press the "Verify" button to start Verify Stream, then give each completion a thumbs up or thumbs down. These scores are included when you export tasks, and a verification summary is available on the project dashboard.

More approaches for agreement calculation

New matching functions with an iou_threshold parameter are now available for annotator agreement calculations across all tags.
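These notes don't describe how the matching functions work internally. A common approach, assumed here only for illustration, is to compare two regions by intersection over union (IoU) and count them as a match when their IoU reaches iou_threshold. The sketch below does this for axis-aligned bounding boxes with greedy one-to-one matching; the function names and the Dice-style scoring formula are hypothetical, not the platform's API.

```python
from typing import List, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in the same units.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_score(regions_a: List[Box], regions_b: List[Box], iou_threshold: float = 0.5) -> float:
    """Greedily pair each region in A with its best unused partner in B and
    count the pair as a match only if their IoU is at least iou_threshold."""
    if not regions_a and not regions_b:
        return 1.0  # two empty annotations agree trivially
    unmatched_b = list(range(len(regions_b)))
    matches = 0
    for box_a in regions_a:
        best_j, best_iou = None, 0.0
        for j in unmatched_b:
            score = iou(box_a, regions_b[j])
            if score > best_iou:
                best_j, best_iou = j, score
        if best_j is not None and best_iou >= iou_threshold:
            unmatched_b.remove(best_j)
            matches += 1
    # Each match covers one region on each side (Dice-style ratio).
    return 2 * matches / (len(regions_a) + len(regions_b))
```

Raising iou_threshold makes the score stricter: two boxes that overlap only partially stop counting as the same region.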

Per-label annotator agreement

The per-label and per-choice annotator agreement matrix gives you a precise way to analyze each annotator's performance and errors.
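As a rough illustration of what a per-label matrix contains, the sketch below assumes a simple, hypothetical data layout (each annotator maps task IDs to the set of labels or choices they selected) and defines pairwise agreement for one label as the share of shared tasks where both annotators made the same decision about that label. The platform's own metric may differ.

```python
from itertools import combinations
from typing import Dict, Set, Tuple

# Hypothetical layout: annotations[annotator][task_id] = set of selected labels.
Annotations = Dict[str, Dict[str, Set[str]]]


def per_label_agreement(annotations: Annotations, label: str) -> Dict[Tuple[str, str], float]:
    """For one label, score every annotator pair by the fraction of shared
    tasks where both either selected the label or both did not."""
    matrix = {}
    for a, b in combinations(sorted(annotations), 2):
        common = annotations[a].keys() & annotations[b].keys()
        if not common:
            continue  # no shared tasks, nothing to compare
        same = sum((label in annotations[a][t]) == (label in annotations[b][t]) for t in common)
        matrix[(a, b)] = same / len(common)
    return matrix


def agreement_matrix(annotations: Annotations) -> Dict[str, Dict[Tuple[str, str], float]]:
    """Build one pairwise matrix per label that appears anywhere."""
    labels = set().union(*(s for per_task in annotations.values() for s in per_task.values()))
    return {label: per_label_agreement(annotations, label) for label in sorted(labels)}
```

Looking at one label at a time shows which classes cause disagreement, which a single overall score would hide.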

Conditional and nested labeling

The labeling interface now supports conditional and nested labeling. See the example below:

Per-region labeling

Per-region labeling is now supported. Check how it works:

Note: The platform also supports agreement evaluation for per-region labeling.

Text tag loads external resources

Use a labeling config like <Text value="$text" valueType="url" /> to load text files from external hosting.

Renaming HTMLLabels => HyperTextLabels

Please use HyperTextLabels instead of HTMLLabels.

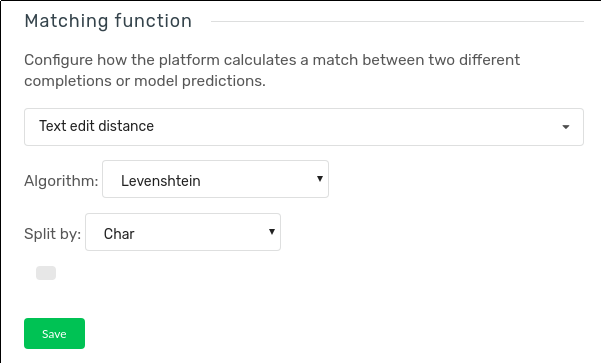

TextArea matching with different distances

The TextArea control tag can now compute various statistics (task agreement, collaborator agreement matrix, prediction accuracy) based on fine-grained text edit distances (Levenshtein, Hamming, etc.) at different levels of text granularity (split by characters or by words).
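The two distances named above are standard, and the granularity option amounts to choosing what counts as one token. The sketch below implements Levenshtein and Hamming distance over any sequence and turns Levenshtein into a similarity by normalizing by the longer input. The normalization and the split_by parameter are assumptions for illustration, not the platform's exact formula.

```python
from typing import Sequence


def levenshtein(a: Sequence, b: Sequence) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,             # delete x
                curr[j - 1] + 1,         # insert y
                prev[j - 1] + (x != y),  # substitute (free when equal)
            ))
        prev = curr
    return prev[-1]


def hamming(a: Sequence, b: Sequence) -> int:
    """Number of differing positions; only defined for equal-length inputs."""
    if len(a) != len(b):
        raise ValueError("Hamming distance needs sequences of equal length")
    return sum(x != y for x, y in zip(a, b))


def text_similarity(a: str, b: str, split_by: str = "char") -> float:
    """1 - normalized Levenshtein distance, on characters or on words
    (split_by is a hypothetical parameter name)."""
    ta, tb = (list(a), list(b)) if split_by == "char" else (a.split(), b.split())
    longest = max(len(ta), len(tb))
    return 1.0 if longest == 0 else 1.0 - levenshtein(ta, tb) / longest
```

Word granularity treats "cat" versus "dog" as one edit, while character granularity counts three, so the choice noticeably changes agreement on short free-text answers.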

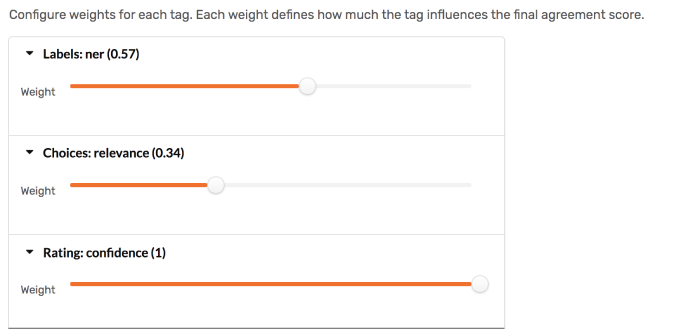

Control weights for tags

When you have multiple control tags, you can assign a specific weight to each tag. Each weight defines how much the tag influences the final agreement score.
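One natural reading of "how much the tag influences the final agreement score" is a weighted mean of per-tag scores. The sketch below assumes exactly that, plus a default weight of 1.0 for tags without an explicit weight; both are illustrative assumptions rather than documented platform behavior.

```python
from typing import Dict


def weighted_agreement(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted mean of per-tag agreement scores.
    Assumption: a tag with no explicit weight counts with weight 1.0."""
    total_weight = sum(weights.get(tag, 1.0) for tag in scores)
    if total_weight == 0:
        return 0.0  # every tag was switched off
    return sum(score * weights.get(tag, 1.0) for tag, score in scores.items()) / total_weight
```

For example, with a label score of 0.8 weighted 3 and a choice score of 0.4 weighted 1, the combined score is (2.4 + 0.4) / 4 = 0.7. Setting a weight to 0 removes that tag from the final score entirely.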

Save projects as user templates

Save the instructions, project config, and other project parameters as a user template, and create new projects from your templates.

Previous button in the labeling stream

Move back through tasks while in the labeling stream.

Agreement method selector

You can now choose the algorithm used for annotator agreement calculations. Several options are available:

- Single Linkage - group annotator results by single linkage aggregation method

- Complete Linkage - group annotator results by complete linkage aggregation method

- No Grouping - average annotator matching scores without grouping
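Single and complete linkage are standard hierarchical clustering rules: single linkage rates two groups by their closest pair of members, complete linkage by their most distant pair. The sketch below applies them to annotator results, assuming pairwise matching scores in [0, 1] (higher means more similar) and a hypothetical threshold that stops merging. How the platform converts the resulting groups into an agreement score isn't described here, so the sketch stops at grouping, plus the plain average used by No Grouping.

```python
from itertools import combinations
from typing import Callable, Dict, List, Tuple

# score[(i, j)] with i < j: matching score between results i and j (assumed in [0, 1]).
Scores = Dict[Tuple[int, int], float]


def _link(n: int, score: Scores, threshold: float,
          linkage: Callable[[List[float]], float]) -> List[List[int]]:
    """Agglomerative grouping: keep merging the two groups with the highest
    linkage score while that score is >= threshold."""
    groups = [[i] for i in range(n)]
    while len(groups) > 1:
        best, best_pair = None, None
        for gi, gj in combinations(range(len(groups)), 2):
            pair_scores = [score[tuple(sorted((a, b)))] for a in groups[gi] for b in groups[gj]]
            s = linkage(pair_scores)
            if best is None or s > best:
                best, best_pair = s, (gi, gj)
        if best < threshold:
            break
        gi, gj = best_pair  # gi < gj, so deleting gj leaves gi in place
        groups[gi].extend(groups[gj])
        del groups[gj]
    return groups


def single_linkage(n: int, score: Scores, threshold: float) -> List[List[int]]:
    # Two groups are as close as their closest pair of results.
    return _link(n, score, threshold, max)


def complete_linkage(n: int, score: Scores, threshold: float) -> List[List[int]]:
    # Two groups are as close as their most distant pair of results.
    return _link(n, score, threshold, min)


def no_grouping(score: Scores) -> float:
    # Plain mean of all pairwise matching scores.
    return sum(score.values()) / len(score) if score else 0.0
```

With scores 0.9 between results 0 and 1, 0.8 between 1 and 2, and 0.1 between 0 and 2, single linkage at threshold 0.5 chains all three results into one group, while complete linkage keeps result 2 separate because it barely matches result 0. Single linkage is more forgiving of chains of similar answers; complete linkage demands that every member of a group agree.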

Overlapping cohort & sampling

You can now specify a cohort of tasks that should be annotated by multiple users, along with the sampling methods applied to that cohort.
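To make the idea concrete, here is a hypothetical assignment scheme: a fraction of tasks is sampled into the overlap cohort and sent to several annotators, while every other task goes to one annotator, rotating round-robin. The parameter names (overlap_fraction, overlap_count) and the two sampling modes ("random", "sequential") are illustrative assumptions, not the platform's settings.

```python
import random
from typing import Dict, List


def assign_tasks(task_ids: List[int], annotators: List[str], overlap_fraction: float,
                 overlap_count: int, sampling: str = "random", seed: int = 0) -> Dict[int, List[str]]:
    """Hypothetical scheme: cohort tasks get overlap_count annotators,
    all other tasks get one, handed out round-robin."""
    rng = random.Random(seed)  # seeded so the cohort is reproducible
    cohort_size = round(len(task_ids) * overlap_fraction)
    if sampling == "random":
        cohort = set(rng.sample(task_ids, cohort_size))
    elif sampling == "sequential":
        cohort = set(task_ids[:cohort_size])  # first N tasks in import order
    else:
        raise ValueError(f"unknown sampling method: {sampling}")
    assignment: Dict[int, List[str]] = {}
    next_annotator = 0
    for task in task_ids:
        k = min(overlap_count, len(annotators)) if task in cohort else 1
        assignment[task] = [annotators[(next_annotator + i) % len(annotators)] for i in range(k)]
        next_annotator = (next_annotator + k) % len(annotators)
    return assignment
```

Only the cohort tasks produce multiple annotations to compare, so agreement metrics can be computed on a sample without paying for full overlap on every task.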